We’ve just posted a paper titled “Effective statistical physics of Anosov systems” that details the physical relevance of the techniques we’ve used to characterize network traffic. The idea is that there appears to be a unique well-defined effective temperature (and energy spectrum) for physical systems that are typical under the so-called chaotic hypothesis. We’ve demonstrated how statistical physics can be used to detect malicious or otherwise anomalous network traffic in another whitepaper also available on the arXiv through our downloads page. The current paper completes the circle and presents evidence indicating that the same ideas can be fruitfully applied to nonequilibrium steady states.

Random bits

23 April 2010

“in [Richard Clarke’s] Cyberwar, like in real war, truth is the first casualty”

Cyberdeterrence through tattling? This is ridiculous. Not bloody likely that will work against serious hackers. And not bloody likely that it would be done in cases where potentially state-sponsored hackers were caught.

Equilibrium Networks beta

19 March 2010

Our visual network traffic monitoring software (for background information, see our website) has successfully passed our internal tests, so we are packaging a Linux-oriented beta distribution that is planned for snail-mailing (no downloads–sorry, but export regulations still apply) on a limited basis before the end of the month. The beta includes premium features that will not be available with our planned free/open-source distribution later this year, but at this early stage we will be happy to provide a special license free of charge to a limited number of qualifying US organizations.

Participants in our beta program will be expected to provide timely and useful feedback on the software, e.g.

• filling perceived gaps in documentation

• proposing and/or implementing improvements

• making feature requests or providing constructive criticism

• providing testimonial blurbs or case studies

• etc.

The software should be able to run in its entirety on a dedicated x86 workstation with four or more cores and a network tap (though you may prefer to try out distributed hardware configurations). If your organization is interested in participating in our beta program, please include a sentence or two describing your anticipated use of this visual network traffic monitoring software along with your organizational background, POC and a physical address in an email to beta [at our domain name]. DVDs will only be mailed once you’ve accepted the EULA. Bear in mind that beta slots are limited. Enjoy!

Martingales from finite Markov processes, part 1

15 February 2010

In an earlier series of posts the emerging inhomogeneous Poissonian nature of network traffic was detailed. One implication of this trend is that not only network flows but also individual packets will be increasingly well described by Markov processes of various sorts. At EQ, we use some ideas from the edifice of information theory and the renormalization group to provide a mathematical infrastructure for viewing network traffic as (e.g.) realizations of inhomogeneous finite Markov processes (or countable Markov processes with something akin to a finite universal cover). An essentially equation-free (but idea-heavy) overview of this is given in our whitepaper “Scalable visual traffic analysis”, and more details and examples will be presented over time.

The question for now is, once you’ve got a finite Markov process, what do you do with it? There are some obvious things. For example, you could apply a Chebyshev-type inequality to detect when the traffic parameters change or the underlying assumptions break down (which, if the model is halfway decent, by definition indicates something interesting is going on–even if it’s not malicious). This idea has been around in network security at least since Denning’s 1986-7 intrusion detection article, though, so it’s not likely to bear any more fruit (assuming it ever did). A better idea is to construct and exploit martingales. One way to do this to advantage starting with an inhomogeneous Poisson process (or in principle, at least, more general one-dimensional point processes) was outlined here and here.
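To make the Chebyshev-type idea concrete, here is a minimal sketch (not our production code). It assumes a baseline window of some scalar traffic statistic, and it flags any new observation lying at least k standard deviations from the baseline mean, where k is chosen so that Chebyshev's bound 1/k² equals a tolerated false-alarm rate. The function names and the packets-per-second numbers are hypothetical, and the bound is only as good as the assumption that the baseline mean and variance still describe the traffic.

```python
import math

def chebyshev_threshold(baseline, false_alarm_rate):
    """Return (mean, radius) with P(|x - mean| >= radius) <= false_alarm_rate,
    assuming new observations share the baseline's mean and variance."""
    mu = sum(baseline) / len(baseline)
    var = sum((x - mu) ** 2 for x in baseline) / len(baseline)
    # Chebyshev: P(|x - mu| >= k * sigma) <= 1 / k**2, so pick k = 1 / sqrt(rate).
    k = 1.0 / math.sqrt(false_alarm_rate)
    return mu, k * math.sqrt(var)

def flag_anomalies(baseline, observations, false_alarm_rate=0.01):
    """Return the observations that violate the Chebyshev radius."""
    mu, radius = chebyshev_threshold(baseline, false_alarm_rate)
    return [x for x in observations if abs(x - mu) >= radius]

# Hypothetical packets-per-second counts (illustrative only).
baseline = [98, 102, 100, 97, 103, 101, 99, 100, 102, 98]
observations = [101, 96, 140, 99]
flagged = flag_anomalies(baseline, observations)
```

The bound is distribution-free, which is why it is so conservative: a 1% false-alarm rate already requires a ten-sigma excursion.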

Probably the most well-known general technique for constructing martingales from Markov processes is the Dynkin formula. Although we don’t use this formula at present (after having done a lot of tinkering and evaluation), a more general result similar to it will help us introduce the Girsanov theorem for finite Markov processes and thereby one of the tools we’ve developed for detecting changes in network traffic patterns.

The sketch below of a fairly general version of this formula for finite processes is adapted from a preprint of Ford (see Rogers and Williams IV.20 for a more sophisticated treatment).

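Consider a time-inhomogeneous Markov process $X_t$ on a finite state space. Let $Q(t)$ denote the generator, and let $P(s,t)$ denote the corresponding transition kernel, i.e.

$$P(s,t) = U^{-1}(s)\,U(t)$$

where the Markov propagator is

$$U(t) = \mathcal{T}^* \exp \int_0^t Q(s)\,ds$$

and $\mathcal{T}^*$ indicates the formal adjoint or reverse time-ordering operator. Thus, e.g., an initial distribution $p(0)$ is propagated as

$$p(t) = p(0)\,U(t).$$

(NB. Kleinrock’s queueing theory book omits the time-ordering, which is a no-no.)

Let $f_t$ be bounded and such that the map $t \mapsto f_t$ is $C^1$. Write

$$s = t_0 < t_1 < \dots < t_n = t$$

and $\Delta t_j = t_{j+1} - t_j$. Now

$$f_t(X_t) - f_s(X_s) = \sum_{j=0}^{n-1} \left[ f_{t_{j+1}}(X_{t_{j+1}}) - f_{t_j}(X_{t_j}) \right]$$

and the Markov property gives that

$$E\left[ f_{t_{j+1}}(X_{t_{j+1}}) - f_{t_j}(X_{t_j}) \,\middle|\, \mathcal{F}_{t_j} \right] = \sum_y P_{X_{t_j} y}(t_j, t_{j+1}) \left[ f_{t_{j+1}}(y) - f_{t_j}(X_{t_j}) \right].$$

The notation $\mathcal{F}_t$ just indicates the history of the process (i.e., its natural filtration) at time $t$.

The transition kernel satisfies a generalization of the time-homogeneous formula

$$P(t) = e^{tQ} = I + tQ + o(t),$$

namely $P(t_j, t_{j+1}) = I + Q(t_j)\,\Delta t_j + o(\Delta t_j)$, so the RHS of the previous equation is $\Delta t_j$ times

$$\left[ \left( \partial_t + Q(t_j) \right) f_{t_j} \right](X_{t_j}), \qquad (Q f)(x) = \sum_y Q_{xy} f(y),$$

plus a term that vanishes in the limit of vanishing mesh. The fact that the row sums of a generator are identically zero has been used to simplify the result.

Summing over $j$ and taking the limit as the mesh of the partition goes to zero shows that

$$E\left[ f_t(X_t) - f_s(X_s) - \int_s^t \left[ \left( \partial_u + Q(u) \right) f_u \right](X_u)\,du \,\middle|\, \mathcal{F}_s \right] = 0.$$

That is,

$$M_t^f = f_t(X_t) - f_0(X_0) - \int_0^t \left[ \left( \partial_u + Q(u) \right) f_u \right](X_u)\,du$$

is a local martingale, or if $Q$ is well behaved, a martingale.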
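As a sanity check on the Dynkin-type formula, here is a small Monte Carlo sketch. It is restricted to the time-homogeneous special case with a time-independent test function, so the compensated quantity is f(X_t) − f(X_0) − ∫₀ᵗ (Qf)(X_s) ds, and its sample mean over many simulated paths should be close to zero. The 3-state generator `Q`, the function `f`, and all names are hypothetical choices made for illustration; this is not the tooling we actually use.

```python
import random

# Hypothetical 3-state generator: off-diagonal entries are jump rates,
# and each row sums to zero.
Q = [[-1.0, 0.7, 0.3],
     [0.4, -0.9, 0.5],
     [0.2, 0.6, -0.8]]
f = [1.0, -2.0, 3.0]  # arbitrary bounded test function on the state space

# (Qf)(x) = sum_y Q[x][y] * f[y]
Qf = [sum(q * fy for q, fy in zip(row, f)) for row in Q]

def dynkin_sample(t_end, x0, rng):
    """Simulate one path on [0, t_end] and return
    f(X_t) - f(X_0) - int_0^t (Qf)(X_s) ds for that path."""
    x, t, integral = x0, 0.0, 0.0
    while True:
        rate = -Q[x][x]                 # total jump rate out of state x
        hold = rng.expovariate(rate)    # exponential holding time
        if t + hold >= t_end:
            integral += Qf[x] * (t_end - t)
            return f[x] - f[x0] - integral
        integral += Qf[x] * hold
        t += hold
        # Jump to y != x with probability Q[x][y] / rate.
        others = [y for y in range(len(Q)) if y != x]
        x = rng.choices(others, weights=[Q[x][y] for y in others])[0]

def mean_dynkin(n_paths=20000, t_end=2.0, x0=0, seed=1):
    """Sample mean of the compensated quantity over independent paths."""
    rng = random.Random(seed)
    total = sum(dynkin_sample(t_end, x0, rng) for _ in range(n_paths))
    return total / n_paths

if __name__ == "__main__":
    print(mean_dynkin())
```

The sample mean only tests the zero-expectation consequence of the martingale property, not the full conditional statement, but it is a quick way to catch a sign or indexing error in the generator.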

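This can be generalized (see Rogers and Williams IV.21 and note that the extension to inhomogeneous processes is trivial): if $X_t$ is an inhomogeneous Markov process on a finite state space $I$ with generator $Q(t)$ and

$$g : I \times I \times [0,\infty) \times \Omega \to \mathbb{R}$$

is such that $(t,\omega) \mapsto g(x,y,t,\omega)$ is locally bounded and previsible and $g(x,x,t,\omega) = 0$ for all $x \in I$, then $M^g$ given by

$$M_t^g = \sum_{0 < s \le t} g(X_{s-}, X_s, s) - \int_0^t \sum_y Q_{X_{s-} y}(s)\, g(X_{s-}, y, s)\,ds$$

is a local martingale. Conversely, any local martingale null at 0 can be represented in this form for some $g$ satisfying the conditions above (except possibly local boundedness).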

To reiterate, this result will be used to help introduce the Girsanov theorem for finite Markov processes in a future post, and later on we’ll also show how Girsanov can be used to arrive at a genuinely simple, scalable likelihood ratio test for identifying changes in network traffic patterns.

The Clinton doctrine

25 January 2010

After the fallout from Aurora, US Secretary of State Hillary Clinton gave a major speech last Thursday at the Newseum in DC. Highlights below:

The spread of information networks is forming a new nervous system for our planet…in many respects, information has never been so free…[but] modern information networks and the technologies they support can be harnessed for good or for ill…

There are many other networks in the world. Some aid in the movement of people or resources, and some facilitate exchanges between individuals with the same work or interests. But the internet is a network that magnifies the power and potential of all others. And that’s why we believe it’s critical that its users are assured certain basic freedoms. Freedom of expression is first among them…

…a new information curtain is descending across much of the world…

Governments and citizens must have confidence that the networks at the core of their national security and economic prosperity are safe and resilient…Disruptions in these systems demand a coordinated response by all governments, the private sector, and the international community. We need more tools to help law enforcement agencies cooperate across jurisdictions when criminal hackers and organized crime syndicates attack networks for financial gain…

States, terrorists, and those who would act as their proxies must know that the United States will protect our networks. Those who disrupt the free flow of information in our society or any other pose a threat to our economy, our government, and our civil society. Countries or individuals that engage in cyber attacks should face consequences and international condemnation. In an internet-connected world, an attack on one nation’s networks can be an attack on all [ed. see article 5 of the North Atlantic Treaty]. And by reinforcing that message, we can create norms of behavior among states and encourage respect for the global networked commons.

China denies everything and is trying to change the subject.

The tone of this speech was remarkable. While it is natural to expect that most nations conduct offensive computer network operations against foreign governments and organizations, getting publicly called on it is rare. Most observers have no doubt that the PRC has been infiltrating and attacking US government and commercial networks for strategic ends, and the NSA would not be doing its job if it were not doing the same thing abroad. So even if everything isn’t Marquis of Queensberry you wouldn’t expect to see folks complain too loudly.

But human rights and censorship are another story. There is a simple reason why Cold War rhetoric was recycled in this speech. Regardless of whether Google capitulates or leaves China (any other outcome is unlikely), by going public instead of leaking to the press they have put the PRC on the defensive. As I remarked earlier, Google surely must have known it had the (at least implicit) backing of the US before it (effectively) named names. The administration must have seen this as a golden opportunity to seize the moral high ground. When force of arms cannot be decisive, the justness of a cause still might be.

China and Google

14 January 2010

Time for the (n+1)th dissection of Google’s recent announcement concerning cyberattacks and censorship. (You’ve got to love recursion!)

As Galrahn points out, discounting Google’s market share relative to Baidu isn’t really sensible. They’ve got a lot of market share there, especially for non-search services without strong competitors—but many of these services (YouTube, Picasa, and often Blogger) have been blocked by the Chinese government. That speaks to two things in China: an opportunity for user base consolidation and to a governmental approach to information that is inimical to Google’s business model. More to the point:

For what amounts to only 2% of revenue, Google is threatening to disrupt the internet behavior of at minimum 118 million internet savvy Chinese and believes that fact alone has value in negotiations.

Source: http://www.flickr.com/photos/dong/4271035989/ / CC BY 2.0

Is this really a funeral, or will a hundred flowers blossom?

That is, Google is using a casus belli to force an issue that predates their entry into the Chinese market. It doesn’t cost them much to do so. They’ve already got the explicit backing of some other heavyweight Western companies (e.g., Yahoo) and network effects may induce many others to climb on board the bandwagon. They surely have the implicit backing of the US government in pushing back against China (and am I the only one who is thinking about the possibility of honeypots here? No way).

The bottom line is that this is not about a moral stand. By taking things public, Google is creating a negotiating opportunity for what it’s wanted all along from China. The real issue here is not who is “right” or “wrong” but who is going to win. For Google to thrive in China, the Chinese Communist Party’s control over information has to be weakened. For the CCP to thrive in China, it has to retain a monopoly on political power, and this requires controlling the flow of information. Moreover, and as I’ve mentioned before, there is a clear path from China’s cyber strategy to the foundations of its politics. So Google will probably not win much if anything in this skirmish.

The larger point is much more interesting, though. After a decade of undeclared cyber war with Chinese characteristics, this is the first overt public response. China has less to lose from cyberwarfare than the West does. But as it finds what it’s looking for with rampant cyberespionage, China may also find that it is hurting itself.

Common ecology quantifies human insurgency

21 December 2009

Researchers in Colombia, Miami, and the UK have published an article in this week’s Nature that claims to identify what amounts to universal power-law behavior (though they don’t call it that, and there are slightly different exponents for different insurgencies, but the putative universal exponent is apparently 5/2) in insurgencies. The researchers analyzed over 54000 violent events across nine insurgencies, including Iraq and Afghanistan. They find that the power-law behavior of casualties (see also here for the distribution of exponents over insurgencies) is explained by “ongoing group dynamics within the insurgent population” and that the timing of events is governed by “group decision-making about when to attack based on competition for media attention”.
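For readers who haven't met power-law exponents before, here is a rough sketch of how one can be estimated from data. This is not the method used in the Nature article (and casualty counts are discrete, which this ignores): it is the standard maximum-likelihood estimator for a continuous power law with density proportional to x^(−α) above a cutoff x_min, applied to synthetic data drawn with α = 5/2. The function names, the cutoff, and the sample size are all assumptions made for illustration.

```python
import math
import random

def sample_power_law(alpha, x_min, n, rng):
    """Draw n samples with density proportional to x**(-alpha) for x >= x_min,
    by inverting the cumulative distribution function."""
    return [x_min * (1.0 - rng.random()) ** (-1.0 / (alpha - 1.0))
            for _ in range(n)]

def fit_exponent(xs, x_min):
    """Maximum-likelihood estimate of alpha for a continuous power-law tail:
    alpha_hat = 1 + n / sum(log(x_i / x_min)) over the samples x_i >= x_min."""
    tail = [x for x in xs if x >= x_min]
    return 1.0 + len(tail) / sum(math.log(x / x_min) for x in tail)

rng = random.Random(0)
synthetic = sample_power_law(alpha=2.5, x_min=1.0, n=50000, rng=rng)
alpha_hat = fit_exponent(synthetic, x_min=1.0)
```

With this many samples the estimate should land within a few hundredths of 2.5. Real event data are far smaller and noisier, which is part of why the reported exponents vary from one insurgency to another.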

Their model is not predictive in any practical sense: few things with power laws are. What it provides is a quantitative framework for understanding insurgency in general, and perhaps more importantly a path towards classifying insurgencies based on a set of quantitative characteristics. One of the nice things about universality (if this is really what is going on) is that it allows you to ignore dynamical details in a defensible way, so long as you understand the basic mechanisms at play. This insight actually derives from the renormalization group (the same one that informs Equilibrium’s architecture) and provides a way to categorize systems. So if there really is universal behavior, then the fact that the model these researchers use is just a caricature wouldn’t matter as much as it otherwise would, and it would allow for reasonably serious quantitative analysis.

The first question about this work ought to be if similar results can be obtained with different model assumptions. The second ought to be attempting to run the same analysis on “successful” wars of national liberation to see if there are indeed distinguishing characteristics. If there are, this framework could be a valuable input to policy and strategy. When pundits talk about Iraq or Afghanistan being another Vietnam, the distinction between terrorist insurgency and guerrilla warfare is blurred. But hard data may provide clarity in the future.

The chimera of cyberdeterrence

8 December 2009

One thing I’ve heard a lot of people talk about recently is the need to develop good theories of cyberdeterrence. It’s making the think tank rounds and what not. But the basic assumptions that cyberdeterrence is needed, or doesn’t exist, etc. aren’t obvious to me.

Let’s take the PRC as a case in point. Based on a lot of pretty strong and publicly discussed circumstantial evidence, it seems reasonable to assume that the PRC is constantly attacking US computer networks, conducting industrial and governmental espionage and laying the groundwork for damaging cyberattacks in the event of hostilities. Lots of people are spending a lot of time, effort, and money to try to mitigate the attacks that are already occurring, and especially the ones that have not yet occurred. And all of these people, myself included, are convinced that we are and will continue to be behind the curve. Since it seems like so many people like to arrogate the terminology of Cold War standoff, I will follow suit and say that the best we can (or should try to) do is “containment”. [1]

This is a fundamental issue in security—not just information security. Professionals mitigate risk and concern themselves with threats, not vulnerabilities. Attacks will inevitably happen. Some will be more successful than others. The point is to work to avoid the most serious, probable, and predictable ones, while trying to detect all attacks and mitigate their effects—that is, to contain attacks. Addressing threats dictates the nature of security approaches, deployments and technologies. And while it is fundamentally defensive in nature, it acts as a deterrent in its own right. Fewer businesses are physically robbed because there are video cameras and silent alarms when it makes sense to have them, and everybody knows it. Fewer individuals attempt serious attacks on DoD because they know people are watching, and getting caught means they’ll (get extradited and) go to prison. And so on.

Containment in the sort of sense indicated above (or in the original sense intended by Kennan and [mis]appropriated by the wider defense intellectual community) is a form of deterrence. It also relies on more overt, less subtle forms of deterrence (read: the threat of overwhelming force, or containment à la Nitze) in order to be effective. But we have that anyway in our military.

As I’ve suggested elsewhere, the PRC may very well be using cyberattacks to deter conventional attacks:

the PRC is already deterring the US by its apparent low-level attacks. These attacks demonstrate a capability of someone in no uncertain terms and in fact may be a cornerstone of the PLA’s overall deterrence strategy. In short, if the PLA convinces US leadership that it can (at least) throw a monkey wrench in US deployments, suddenly the PRC has more leverage over Taiwan, where the PLA would need to mount a quick amphibious operation. And because it’s possible to view the Chinese Communist Party’s claim to legitimacy as deriving first of all from its vow to reunite China (i.e., retake the “renegade province” of Taiwan) one day, there is a clear path from the PLA cyber strategy to the foundations of Chinese politics…The PLA has concluded that cyber attacks focusing on C2 and logistics would buy it time, and presumably enough time (in its calculations) to achieve its strategic aims during a conflict. This strategy requires laying a foundation, and thus the PRC is presumably penetrating networks: not just for government and industrial espionage, but also to make its central war plan credible.

The US, on the other hand, can clearly deter serious cyberattacks through its conventional military, not least because serious cyberattacks will be paired with kinetic attacks, and attribution won’t be a problem. I’ve talked about this elsewhere and won’t belabor it here.

But the idea that we should more actively deter cyberattacks using cyber methods is out there. It is based on unrealistic technological assumptions, but more importantly it’s fundamentally wrong. It doesn’t make sense from the point of view of political or military objectives. The US wouldn’t gain anything from a cyberdeterrent: it treats cyber as a strategic capability, and wouldn’t use it just to deter the sorts of cyberattacks that it faces now. And the PRC wouldn’t use any more of its presumptive cyber capability than the bare minimum required for the PLA’s purposes—and note that the likely PLA strategy would also require a powerful reserve (but not in the sense of “second-strike”) capability.

If cyberdeterrence is supposed to mean deterring cyberattacks using cyber methods, we’re better off without it. If cyberdeterrence means just about anything else, we’ve either already got it or have already decided against it.

[1] Containment, as originally intended by Kennan, was not a strategy of constant military opposition. Kennan did not believe that the USSR was a grave military threat to the US (or to Western Europe), and went to some lengths to clarify this point in his later years, but he very much believed that the USSR was an entity that needed to be opposed. Its influence needed to be contained so that it could not gain ground in Europe through political and economic means: these were the Soviets’ preferred avenues for expansion.

Although the USSR possessed a tremendously powerful military machine at the end of World War II, the US held a clear strategic advantage at the time of the long telegram, and until the Soviets had more than a handful of atomic bombs, they did not have the minimum means of reprisal to counter a US attack. It was only decades later that the USSR presented any direct military threat to the United States homeland. It’s important to remember that not only was NATO always intended to demonstrate American commitment to Europe through placing troops as hostages to a Soviet strike, but that the demonstration was as much (if not more) for the benefit of the Europeans as for the Soviets.

In short, the strategy of containment was not originally intended as a justification for a colossal military counterweight to the USSR, but as justification for a clear commitment to providing a viable political and economic alternative—backed up by force, but not based on the threat of its use. Instead the threat became the message.

Birds on a wire and the Ising model

30 November 2009

Statistical physics is very good at describing lots of physical systems, but one of the basic tenets underlying our technology is that statistical physics is also a good framework for describing computer network traffic. Lots of recent work by lots of people has focused on applying statistical physics to nontraditional areas: behavioral economics, link analysis (what the physicists abusively call network theory), automobile traffic, etc.

In this post I’m going to talk about a way in which one of the simplest models from statistical physics might inform group dynamics in birds (and probably even people in similar situations). As far as I know, the experiment hasn’t been done–the closest work to it seems to be on flocking (though I’ll give $.50 and a Sprite to the first person to point out a direct reference to this sort of thing). I’ve been kicking it around for years and I think that at varying scopes and levels of complexity, it might constitute anything from a really good high school science fair project to a PhD dissertation. In fact I may decide to run with this idea myself some day, and I hope that anyone else out there who wants to do the same will let me know.

The basic idea is simple. But first let me show you a couple of pictures.

Notice how the tree in the picture above looks? There doesn’t seem to be any wind. But I bet that either the birds flocked to the wire together or there was at least a breeze when the picture below was taken:

Because the birds are on wires, they can face in essentially one of two directions. In the first picture it looks very close to a 60%-40% split, with most of the roughly 60 birds facing left. In the second picture, 14 birds are facing right and only one is facing left.

Now let me show you an equation:

$$H = -J \sum_{\langle i,j \rangle} s_i s_j - h \sum_i s_i$$

If you are a physicist you already know that this is the Hamiltonian for the spin-1/2 Ising model with an applied field, but I will explain this briefly. The Hamiltonian is really just a fancy word for energy. It is the energy of a model (notionally magnetic) system in which spins $s_i$ that occupy sites that are (typically) on a lattice (e.g., a one-dimensional lattice of equally spaced points) take the values $s_i = \pm 1$ and can be taken as caricatures of dipoles. The notation $\langle i,j \rangle$ indicates that the first sum is taken over nearest neighbors in the lattice: the spins interact, but only with their neighbors, and the strength of this interaction is reflected in the exchange energy $J$.

The strength of the spins’ interaction with an applied (again notionally magnetic) field is governed by the field strength $h$.

This is the archetype of spin models in statistical physics, and it won’t serve much for me to reproduce a discussion that can be found many other places (you may like to refer to Goldenfeld’s Lectures on Phase Transitions and the Renormalization Group, which also covers the renormalization group method that inspires the data reduction techniques used in our software). Suffice it to say that these sorts of models comprise a vast field of study and already have an enormous number of applications in lots of different areas.

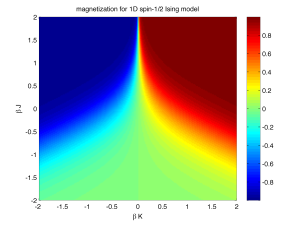

Now let me talk about what the pictures and the model have in common. The (local or global) average spin is called the magnetization. Ignoring an arbitrary sign, in the first picture the magnetization is roughly 0.2, and in the second it’s about 0.87. The 1D spin-1/2 Ising model is famous for exhibiting a simple phase transition in magnetization: indeed, the expected value of the magnetization for $J > 0$ in the thermodynamic limit is shown in every introductory statistical physics course worth the name to be

$$\langle s \rangle = \frac{\sinh \beta h}{\sqrt{\sinh^2 \beta h + e^{-4 \beta J}}}$$

where $\beta$ is the inverse temperature (in natural units), $J$ is the exchange energy and $h$ is the applied field strength. As ever, a picture is worth a thousand words:

For $h = 0$ and $\beta < \infty$ it’s easy to see that $\langle s \rangle = 0$. But if $h \neq 0$ and $\beta \to \infty$, then taking the subsequent limit $h \to 0^\pm$ yields a magnetization of $\pm 1$.

At zero temperature the model becomes completely magnetized–i.e., totally ordered. (Finite-temperature phase transitions in magnetization in the real world are of paramount importance for superconductivity.)
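If you’d rather check the magnetization numerically than take it on faith, here is a minimal sketch. It assumes the textbook closed form for the 1D Ising chain, sinh(βh)/√(sinh²(βh) + e^(−4βJ)), with β the inverse temperature, J the exchange energy and h the applied field. It compares that against a finite-difference derivative of the log of the largest transfer-matrix eigenvalue, and also computes the bird-photo magnetizations from head counts. The function names and the convention that right-facing birds count as +1 are my own choices for illustration.

```python
import math

def magnetization_closed_form(beta, J, h):
    """Textbook thermodynamic-limit magnetization of the 1D Ising chain."""
    s = math.sinh(beta * h)
    return s / math.sqrt(s * s + math.exp(-4.0 * beta * J))

def log_lambda_max(beta, J, h):
    """Log of the largest eigenvalue of the 2x2 transfer matrix
    [[e^{beta(J+h)}, e^{-beta J}], [e^{-beta J}, e^{beta(J-h)}]]."""
    a = math.exp(beta * (J + h))
    d = math.exp(beta * (J - h))
    b = math.exp(-beta * J)
    tr, det = a + d, a * d - b * b
    return math.log(0.5 * (tr + math.sqrt(tr * tr - 4.0 * det)))

def magnetization_transfer_matrix(beta, J, h, eps=1e-6):
    """m = (1/beta) d/dh log(lambda_max), via a central difference."""
    up = log_lambda_max(beta, J, h + eps)
    down = log_lambda_max(beta, J, h - eps)
    return (up - down) / (2.0 * eps * beta)

def empirical_magnetization(n_right, n_left):
    """Average spin from head counts, taking right-facing as +1 (an assumption)."""
    return (n_right - n_left) / (n_right + n_left)

# The two photos: roughly 36 of 60 birds facing left, then 14 right and 1 left.
first_photo = empirical_magnetization(24, 36)   # -0.2
second_photo = empirical_magnetization(14, 1)   # 13/15, about 0.87
```

Evaluating the closed form at h = 0 gives exactly zero for any finite β, while a large β with a tiny positive h gives a value indistinguishable from 1, which is the order of limits described above.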

And at long last, here’s the point. I am willing to bet ($.50 and a Sprite, as usual) that the arrangement of birds on wires can be well described by a simple spin model, and probably the spin-1/2 Ising model provided that the spacing between birds isn’t too wide. I expect that the same model with varying parameters works for many–or even most or all–species in some regime, which is a bet on a particularly strong kind of universality. Neglecting spacing between birds, I expect the effective exchange strength to depend on the species of bird, and the effective applied field to depend on the wind speed and angle, and possibly the sun’s relative location (and probably a transient to model the effects of arriving on the wire in a flock). I don’t have any firm suspicions on what might govern an effective temperature here, but I wouldn’t be surprised to see something that could be well described by Kawasaki or Glauber dynamics for spin flips: that is, I reckon that–as usual–it’s necessary to take timescales into account in order to unambiguously assign a formal or effective temperature (if the birds effectively stay still, then dynamics aren’t relevant and the temperature should be regarded as being already accounted for in the exchange and field parameters). I used to think about doing this kind of experiment using tagged photographs or their ilk near windsocks or something similar, but I can’t see how to get any decent results that way without more effort than a direct experiment. I think it probably ought to be done (at least initially) in a controlled environment.
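For anyone tempted to play with this before finding real birds, here is a toy sketch of Glauber (heat-bath) dynamics for spins on a wire. It assumes the usual Ising energy with exchange strength J and field h, a periodic wire for simplicity (real wires have ends), and single-spin flips accepted with probability 1/(1 + exp(βΔE)). Everything here, including the parameter values, is hypothetical and chosen only to illustrate the update rule.

```python
import math
import random

def glauber_sweeps(n_spins, beta, J, h, n_sweeps, rng):
    """Run Glauber dynamics on a periodic chain from a random start
    and return the final list of spins (+1 or -1)."""
    spins = [rng.choice((-1, 1)) for _ in range(n_spins)]
    for _ in range(n_sweeps * n_spins):
        i = rng.randrange(n_spins)
        # Periodic neighbors: spins[-1] wraps around when i == 0.
        neighbors = spins[i - 1] + spins[(i + 1) % n_spins]
        # Energy change if spin i flips, for H = -J sum s_i s_j - h sum s_i.
        delta_e = 2.0 * spins[i] * (J * neighbors + h)
        # Glauber rule: flip with probability 1 / (1 + exp(beta * delta_e)).
        if rng.random() < 1.0 / (1.0 + math.exp(beta * delta_e)):
            spins[i] = -spins[i]
    return spins

def magnetization(spins):
    """Average spin of a configuration."""
    return sum(spins) / len(spins)

# A strong "wind" (large h) at low temperature should line nearly everyone up.
windy = glauber_sweeps(n_spins=50, beta=2.0, J=1.0, h=1.0,
                       n_sweeps=200, rng=random.Random(0))
```

The interesting experimental question is the reverse one: given observed configurations, which effective J, h and flip timescale best reproduce them.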

Anyways, there it is. The experiment always wins, but I have a hunch how it would turn out.

UPDATE 30 Jan 2010: Somebody had another interesting idea involving birds on wires.

Posted by eqnets


MIRCON and network counteroffensives

13 October 2010

I popped in for a couple of stretches at Mandiant’s MIRcon incident response conference today and yesterday and was struck by a panel discussion on Tuesday about defenders going on offense. The gist was half a) it’s of dubious legality and wisdom and half b) you’ve got to be an expert to do it properly. Now politics and economics being what they are, a) will ultimately be irrelevant without a prohibition and b) will govern the dynamics.

I recalled Mandiant’s model: they have a bunch of people constantly working on highly technical stuff in a field that changes rapidly—this level of expertise requires economies of scale. The same is true for black hat hackers: economy of scale drives the less skilled to leverage off-the-shelf capabilities, and it drives the more highly skilled to collaborate on the most demanding projects.

Because defense costs more than offense, “offensors” could benefit from the same economies of scale. I can imagine a future in which people not only pay for but subscribe to offense as a service, where a group of (nominally) white hatters have their own organizations that do nothing but attack designated black hatters, thereby raising the costs of doing malicious business. The economics might work for the white hatters in much the same way it does for insurance companies, and the product would not be entirely dissimilar. If this sort of activity were tolerated by authorities it might often be preferred by many hackers over black hatting, even if the latter gave bigger paychecks. This could further affect the economics in a good way.

If it will make sense for corporations to go on network counteroffensives themselves, it will make more sense for them to outsource that role if they possibly can. And they might end up being able to.